A Brief History of Coding

An epic saga covering thousands of years of discovery!

Coding might seem like a modern phenomenon, but it has roots in computing processes that date back to World War II, the 1800s, and even ancient Sumeria.

Abacus

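The earliest known computing device is probably the abacus, with early forms appearing in ancient Sumeria around 2500 BCE. Often called the world’s first calculator, the abacus, which is still in use today, represents numbers by the positions of its beads. It is not a binary device, but it is an early example of the idea behind every computer: physical states can stand for numbers and be manipulated by fixed rules.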

Ada Lovelace

Born in 1815, Ada Lovelace studied mathematics, which at the time was highly unusual for a woman. In 1843, Ada published her translation of an article about Charles Babbage’s Analytical Engine, adding her own extensive notes. She explained that when the machine is fed a sequence of operations on symbols and numbers, it could be used to solve a wide range of mathematical problems. One of her notes laid out, step by step, how the machine could calculate Bernoulli numbers, which is widely regarded as the first algorithm intended to be processed by a machine!

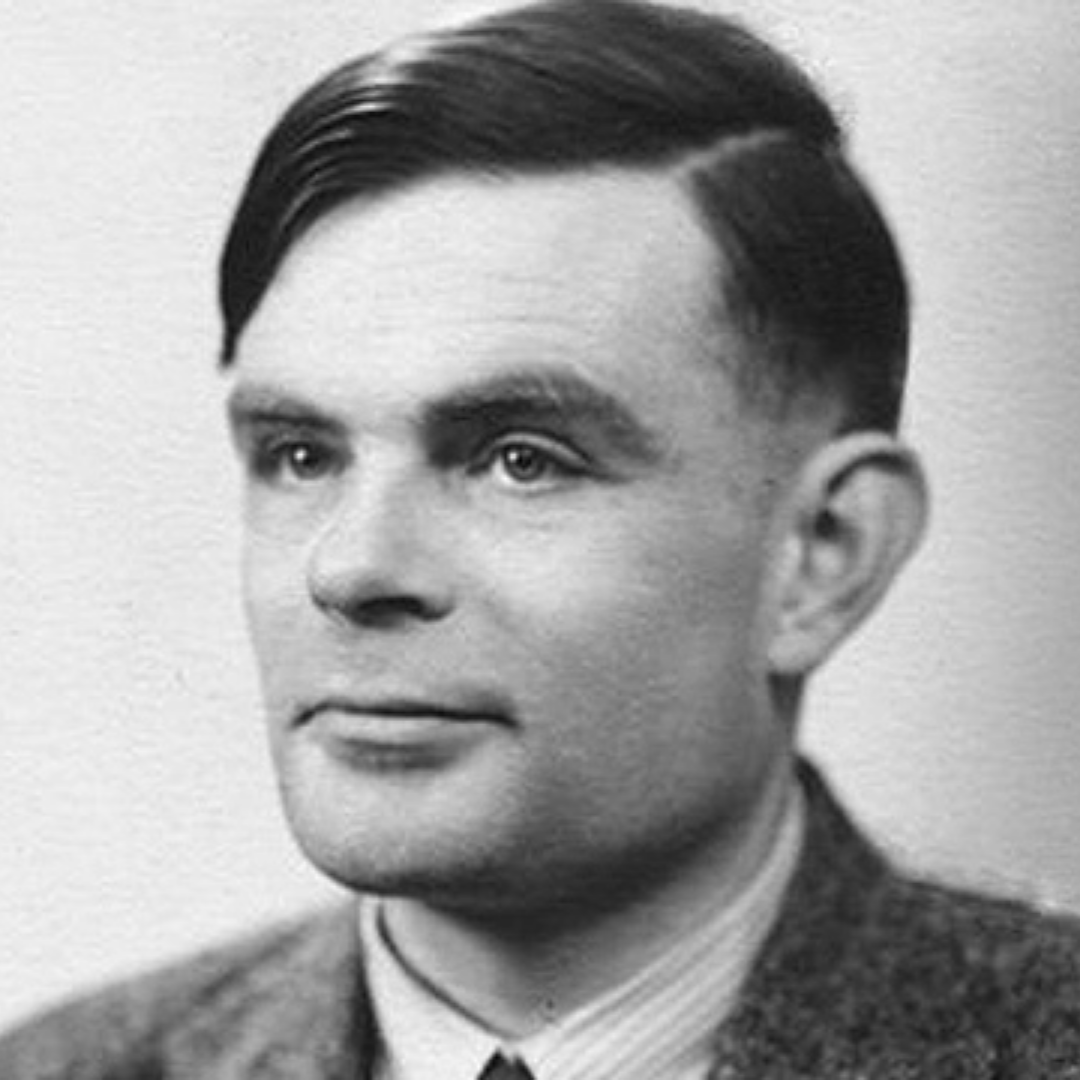
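To give a feel for the kind of calculation Lovelace described, here is a minimal modern sketch that computes Bernoulli numbers in Python. It uses a standard textbook recurrence rather than the exact sequence of operations from her notes, and the function name `bernoulli_numbers` is our own choice for illustration. Her notes also numbered the values differently than the modern convention used here.

```python
from fractions import Fraction
from math import comb  # available in Python 3.8 and later


def bernoulli_numbers(n):
    """Return the Bernoulli numbers B_0 through B_n as exact fractions.

    Uses the recurrence B_m = -1/(m+1) * sum(C(m+1, k) * B_k for k < m),
    with B_0 = 1 (so B_1 comes out as -1/2).
    """
    b = [Fraction(1)]
    for m in range(1, n + 1):
        # Combine all earlier values, weighted by binomial coefficients.
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


if __name__ == "__main__":
    for index, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{index} = {value}")
```

Each new value is built from all the values before it, which is why a machine that can repeat a sequence of operations is such a good fit for the job.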

Alan Turing

Fast forward to the 1930s and 1940s: Alan Turing and his codebreaking machine, the Bombe, made the next major advancement in the world of coding. During World War II, the German military communicated using secret encrypted messages with the help of their famous machine, the Enigma. Turing joined the British government’s codebreaking effort at Bletchley Park to crack the Enigma, so that Britain could read German communications. Speeding past the tedious process of manual code-breaking, Turing designed an electromechanical machine (called the Bombe) that was so adept at deciphering the so-called unbreakable German codes that some historians estimate the codebreaking work shortened the war by two years or more, and by some estimates as many as four.

After the war, Turing designed a more flexible, general-purpose machine known as the “Automatic Computing Engine” (ACE). It was a computer rather than a programming language, and one of the earliest designs for a machine that stores its programs in memory, an idea that helped launch the modern programming world.

Development of modern programming languages

The 1950s saw the arrival of programming languages that are still in use today. FORTRAN, which is still used for scientific computing on the world’s fastest supercomputers, was developed by a team at IBM starting in 1954 and released in 1957. Another language that burst into being during this period was ALGOL, first specified in 1958, whose ideas influenced many modern-day languages including C++, Python, and JavaScript.

Golden age of development

The 1980s are often referred to as the golden age of development for coding, and with good reason! Throughout the 80s, the world witnessed the arrival of groundbreaking languages like C++ (released in 1985) and Perl (1987), languages that have been used to build modern tools like Google Chrome, Adobe’s creative software, and Amazon. The Internet also grew rapidly during the decade, and at its very end, in 1989, Tim Berners-Lee proposed the World Wide Web, which soon brought web languages such as HTML onto the scene.

The future: AI, machine learning, and more

In the future, coding might look much more like a conversation. Developments in artificial intelligence and machine learning could mean everyone from software engineers to businesspeople can increasingly use AI tools to program without needing to spend months perfecting complicated coding languages like Python or C++. Of course, even with AI tools assisting coders, a fundamental understanding of the principles of code will still be a big advantage!

Want to prepare your child to make their contribution to the history of coding? Sign up for our coding classes today.